Dive Brief:

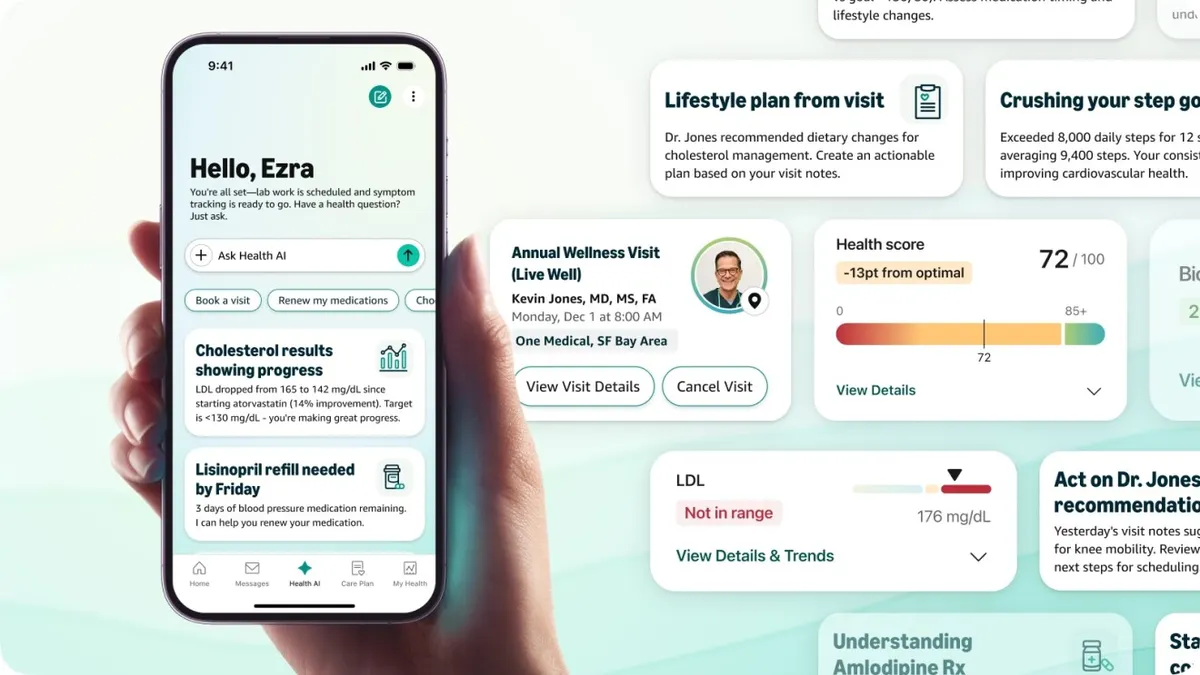

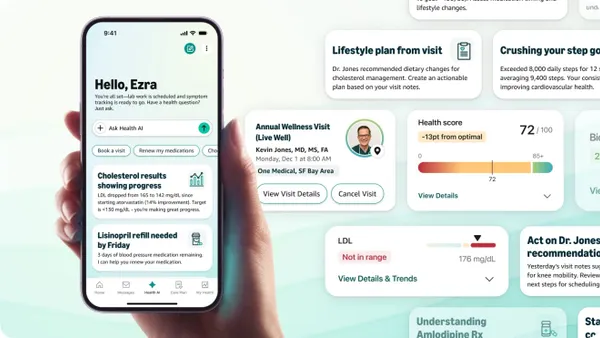

- Amazon is launching a health-focused artificial intelligence chatbot for members of its One Medical primary care chain, the tech giant said Wednesday.

- The Health AI assistant uses One Medical members’ medical record information to answer health questions and provide guidance on symptoms and potential treatments. Users can also chat with the assistant to book appointments, decide between care settings and renew prescriptions.

- The chatbot is built with “multiple patient safety guardrails,” including protocols that connect patients with a provider through messages or an in-person appointment when the provider’s clinical judgment is needed, an Amazon spokesperson said.

Dive Insight:

Amazon’s health chatbot offering comes on the heels of similar announcements by major AI firms in recent weeks. Earlier this month, OpenAI rolled out its own dedicated health information chatbot, ChatGPT Health, while Anthropic launched a suite of healthcare AI tools, including functionality that allows users to connect their medical data and ask health questions.

Plenty of people are already turning to AI for healthcare queries. More than 40 million people ask ChatGPT healthcare questions each day, including questions about insurance, symptoms and treatment options, according to a report published in early January by OpenAI.

Amazon argues its offering, now available to One Medical members in the primary care chain’s app, has an edge over generic health information tools since users won’t need to upload health data from multiple providers.

“The U.S. health care experience is fragmented, with each provider seeing only parts of your health puzzle,” Neil Lindsay, senior vice president of Amazon Health Services, said in the announcement. “Health AI in the One Medical app brings together all the pieces of your personal health information to give you a more complete picture— helping you understand your health, and supporting you in getting the care you need to get and stay well.”

Still, the proliferation of AI chatbots for health guidance has raised some concern among experts, given that the tools can hallucinate and give inaccurate or misleading information.

Patient safety nonprofit ECRI named misuse of AI chatbots as the top health technology hazard for 2026, noting the tools have suggested incorrect diagnoses, recommended unnecessary testing, promoted subpar medical supplies and invented body parts.

Amazon said its tool includes guardrails. For example, if a patient has recurring urinary tract infections, the assistant will recommend an in-person visit instead of virtual care.

And if a user asks about taking a new supplement, the chatbot will check current prescriptions, recent lab tests and health conditions and send complex interactions to a clinician.

Conversations are also marked with a label warning that the assistant occasionally makes mistakes and that responses aren’t a substitute for a provider’s advice.

Additionally, Amazon said the assistant has strict privacy features, including encryption and controls over who can access medical records. The tech giant also noted conversations with the assistant aren’t automatically added to patients’ health records.